In the image processing world there seem to be two options: raster and vector graphics. Raster graphics work with pixels: you pick a single color for each pixel in a vast grid. Vector images are made from simple shapes: lines, splines, boxes, gradients, and closed polygons. Vector images can easily be tweaked after creation; raster graphics, not so much. But what if there were a way to combine the creative flexibility of raster graphics with the editability of vector graphics?

The first question is: why are vector graphics formats so limited? Why does SVG only define lines, boxes and polygons, with a few bonuses like gradients, shadowing and so on? There are two reasons:

- File size. SVG is XML-based, so defining a spline takes a lot of bytes. The more detailed the curve you want to represent, the bigger the file gets.

- When it was created in 2001, computers weren't nearly as powerful as they are now, and rendering anything more complex than lines was computationally expensive.
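As a rough illustration of the file-size point, the sketch below writes the same hypothetical 1000-sample stroke two ways: as an SVG path of straight segments, and as raw little-endian float32 pairs. It is not a benchmark of any real editor's format. The sample data and function names are made up for the example.

```python
import struct

def svg_polyline_path(points):
    """Format (x, y) points as an SVG <path> using M/L commands, 2 decimal places."""
    head, *rest = points
    parts = [f"M{head[0]:.2f},{head[1]:.2f}"]
    parts += [f"L{x:.2f},{y:.2f}" for x, y in rest]
    return '<path d="' + " ".join(parts) + '"/>'

def packed_size(points):
    """Bytes needed to store the same points as little-endian float32 pairs."""
    flat = [c for p in points for c in p]
    return len(struct.pack(f"<{len(flat)}f", *flat))

# A hypothetical 1000-sample stroke, roughly what a stylus produces for one long line
points = [(100.0 + i * 0.5, 200.0 + (i % 50) * 1.25) for i in range(1000)]
print(len(svg_polyline_path(points)), packed_size(points))
```

Here the text encoding comes out at roughly twice the size of the binary one. That gap grows with every extra decimal place of precision and every extra per-point attribute, such as pressure.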

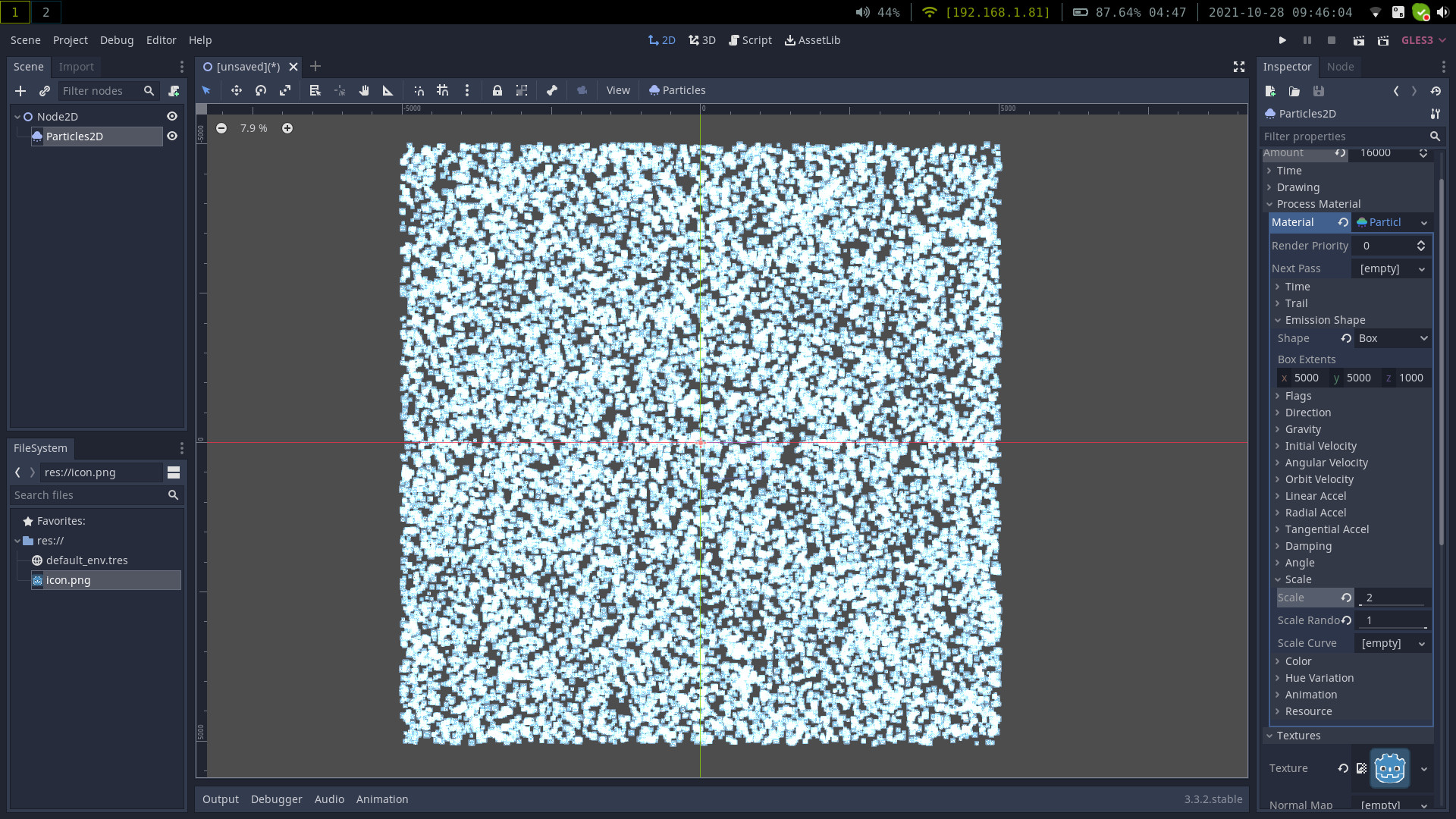

Imagine if, instead of rendering a uniform distribution of Godot logos, I rendered an image of a brush texture following a curve read from a file. I would have something that looked like a raster brush stroke, but the curve could be edited, the brush texture changed, and the pressure response tweaked. We would also be able to zoom in until we could see the pixels of the brush texture, rather than having some arbitrary "canvas texture" limit the detail.
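The stroke idea above can be sketched as "stamping" dabs along a stored curve. This is a minimal sketch that assumes the curve is a polyline of stylus control points with a pressure value each. All the names (`ControlPoint`, `Dab`, `stamp_along`) and the gamma-style `pressure_curve` are hypothetical, and pressure is simply interpolated linearly between points. The output is only the position and size of each dab; a renderer would draw the brush texture at each one.

```python
import math
from dataclasses import dataclass

@dataclass
class ControlPoint:
    x: float
    y: float
    pressure: float  # 0.0 to 1.0, as reported by the stylus

@dataclass
class Dab:
    x: float
    y: float
    size: float      # diameter at which the brush texture would be drawn

def pressure_curve(p, gamma=1.5):
    """Hypothetical pressure response; can be changed after the stroke is drawn."""
    return max(0.0, min(1.0, p)) ** gamma

def stamp_along(points, spacing, base_size, response=pressure_curve):
    """Place a dab every `spacing` units of arc length along the polyline `points`."""
    dabs = []
    next_at = 0.0    # arc length at which the next dab goes
    travelled = 0.0  # arc length at the start of the current segment
    for a, b in zip(points, points[1:]):
        seg = math.hypot(b.x - a.x, b.y - a.y)
        while seg > 0 and next_at <= travelled + seg:
            t = (next_at - travelled) / seg  # fractional position within this segment
            p = a.pressure + t * (b.pressure - a.pressure)
            dabs.append(Dab(a.x + t * (b.x - a.x),
                            a.y + t * (b.y - a.y),
                            base_size * response(p)))
            next_at += spacing
        travelled += seg
    return dabs
```

The dabs are derived entirely from the stored control points. Changing `spacing`, `base_size` or `response` therefore just means calling `stamp_along` again, which is what makes the stroke editable after the fact.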

Now, 16,000 image splats may sound like a lot, but it's not. Drawing a couple strokes on my screen

with a stylus generates 2000-3000 control points. If we were to expect to render an entire image from

scratch each frame, we would quickly hit a performance bottleneck. Fortunately we don't have to be

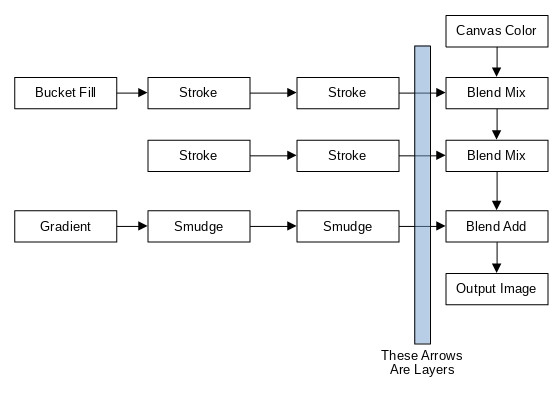

so naive. If we use a dependency graph we can only render the things that have changed. If we have a

dependency tree, it also gives us support for layers for free. Interestingly, a layer is a "tag" that

points at the tip operation just before compositing the "layers" together:

Using a depgraph also means that if you make a stroke on one layer, the other layers don't require any computation.
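Here is a minimal sketch of that dependency graph. It assumes each operation caches its output and carries a dirty flag that is propagated downstream on edit. Layers are plain tags (dictionary entries) pointing at their tip node. To keep the example checkable, "images" are tuples of stroke labels rather than pixel buffers. `Node`, `stroke`, `blank` and `push_stroke` are all hypothetical names.

```python
def stroke(label):
    """Hypothetical stroke op: 'draws' by appending its label to the input image."""
    return lambda image: image + (label,)

def blank():
    return ()  # an empty canvas

class Node:
    """One operation in the image graph (blank canvas, stroke, composite, ...)."""

    def __init__(self, name, op, inputs=()):
        self.name, self.op, self.inputs = name, op, list(inputs)
        self.dependents = []
        self.cache = None
        self.dirty = True
        for node in self.inputs:
            node.dependents.append(self)

    def invalidate(self):
        """Mark this node and everything downstream as needing a re-render."""
        if self.dirty:
            return  # a dirty node's dependents are already dirty
        self.dirty = True
        for node in self.dependents:
            node.invalidate()

    def set_op(self, op):
        """Edit this operation, e.g. change a stroke's curve or brush."""
        self.op = op
        self.invalidate()

    def evaluate(self, log):
        """Return this node's image, re-rendering only if it (or an input) changed."""
        if self.dirty:
            self.cache = self.op(*(node.evaluate(log) for node in self.inputs))
            self.dirty = False
            log.append(self.name)
        return self.cache

def push_stroke(layers, composite, layer_name, name, op):
    """Add a stroke on top of a layer and move the layer's tag to the new tip."""
    old_tip = layers[layer_name]
    new_tip = Node(name, op, [old_tip])
    composite.inputs[composite.inputs.index(old_tip)] = new_tip
    old_tip.dependents.remove(composite)
    new_tip.dependents.append(composite)
    composite.invalidate()
    layers[layer_name] = new_tip
    return new_tip

# Two layers, each a chain of operations; a layer is just a tag on its tip.
bg_a = Node("bg_a", blank)
a1 = Node("a1", stroke("a1"), [bg_a])
a2 = Node("a2", stroke("a2"), [a1])
bg_b = Node("bg_b", blank)
b1 = Node("b1", stroke("b1"), [bg_b])
layers = {"A": a2, "B": b1}
out = Node("composite", lambda *images: images, [a2, b1])

first_log = []
out.evaluate(first_log)          # first render touches every node

edit_log = []
b1.set_op(stroke("b1-edited"))   # edit the stroke on layer B
result = out.evaluate(edit_log)
print(edit_log)                  # ['b1', 'composite']: layer A is not re-rendered
```

After the edit, only the changed stroke and the final composite re-run. Layer A's cached output is reused as-is. Calling `push_stroke(layers, out, "A", "a3", stroke("a3"))` similarly re-renders only the new stroke and the composite.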

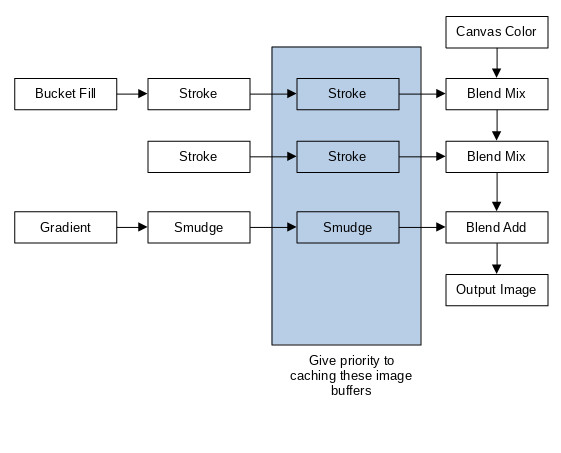

Despite computers now being a lot faster, they aren't all-powerful. If we have a screen of 1920x1080 pixels with four channels of 32-bit floats, that comes to around 33 MB of uncompressed image data per buffer. If we assume a VRAM limit of 2 GB, that is only about 60 image buffers. While this is enough to hold the entire image graph shown above, it clearly isn't enough to hold the thousands of strokes that a typical painting contains. There are several possible solutions here.
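The buffer arithmetic above, worked through. The only assumptions are the ones already stated (1920x1080, RGBA, 32-bit floats, a 2 GB budget). Both the decimal and binary readings of "2 GB" are shown, because they give slightly different counts.

```python
def buffer_bytes(width, height, channels=4, bytes_per_channel=4):
    """Size of one uncompressed image buffer (RGBA, 32-bit float per channel by default)."""
    return width * height * channels * bytes_per_channel

per_buffer = buffer_bytes(1920, 1080)
decimal_count = (2 * 10**9) // per_buffer   # 2 GB read as 2,000,000,000 bytes
binary_count = (2 * 2**30) // per_buffer    # 2 GiB read as 2,147,483,648 bytes
print(per_buffer, decimal_count, binary_count)  # 33177600 60 64
```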

One is to swap images out to main memory. Giving ourselves 2 GB of system memory therefore doubles our number of buffers to ~120. Many of them will compress pretty well (e.g. a bucket fill is all one color, and a stroke will have lots of blank space), so with a lossless compression scheme we may be able to push this number even higher.
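To get a feel for how well a mostly-blank stroke buffer compresses, the sketch below uses Python's standard `zlib` on a small hypothetical 256x256 RGBA float32 tile containing one short flat-colored band. zlib is just a convenient stand-in for "some lossless compressor". Real painted pixels with texture and noise will compress noticeably worse than this flat band.

```python
import zlib
from array import array

W, H = 256, 256                          # small hypothetical tile so this runs quickly
buf = array("f", [0.0]) * (W * H * 4)    # RGBA float32, fully transparent
for y in range(100, 110):                # a short horizontal "stroke"
    for x in range(50, 200):
        i = (y * W + x) * 4
        buf[i:i + 4] = array("f", [0.1, 0.2, 0.8, 1.0])

raw = buf.tobytes()
packed = zlib.compress(raw)
assert zlib.decompress(packed) == raw    # lossless: we get the exact bytes back
print(len(raw), len(packed))
```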

But eventually there will be a limit. The solution is to use a clever caching scheme and discard image buffers we don't think we'll need. If we ensure that the images within a couple of operations of each layer's tip are stored, then undo will be fast, as will adding new strokes to the top. The only slow case is editing a very old stroke, which will require regenerating the whole chain of images leading up to the tip.
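The trade-off in that caching policy can be made concrete with a small cost model. It is a sketch under simplifying assumptions: a single layer is modeled as a linear chain of operations (index 0 oldest, last index the tip), and only the last `keep_near_tip` outputs are kept. The function names are hypothetical.

```python
def cached_indices(chain_len, keep_near_tip):
    """Indices of operations whose output buffers we keep: the last few before the tip."""
    return set(range(max(0, chain_len - keep_near_tip), chain_len))

def ops_to_rerun(edit_index, chain_len, cached):
    """How many operations must be re-run after editing operation `edit_index`.

    We restart from the nearest cached output before the edited operation
    (or from a blank canvas) and replay every operation up to the tip.
    """
    start = edit_index
    while start > 0 and (start - 1) not in cached:
        start -= 1
    return chain_len - start

cached = cached_indices(100, keep_near_tip=3)
print(ops_to_rerun(99, 100, cached), ops_to_rerun(5, 100, cached))  # 1 100
```

Editing the tip (or stacking a new stroke on it) costs a single operation. Editing stroke 5 replays all 100, because none of the images below it were kept.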

I suspect it would be a good plan to pre-allocate a fixed number of buffers and then juggle them around, with some algorithm for picking which buffers to keep in order to maximize performance. An image (at least the sort I paint) is unlikely to have more than 20 layers, so 50 image buffers in VRAM (about 1.66 GB at 33 MB each) is enough to keep a couple of spares lying around and stay below our VRAM target.
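One possible shape for that pool is a fixed set of slots with least-recently-used eviction, where some entries (for example layer tips) are pinned so they are never evicted. This is a sketch of one candidate algorithm, not a settled design. The slot numbers stand in for pre-allocated GPU textures, and `BufferPool` and `acquire` are hypothetical names.

```python
from collections import OrderedDict

class BufferPool:
    """A fixed set of pre-allocated slots, reused instead of allocating per render."""

    def __init__(self, capacity):
        self.free = list(range(capacity))  # slot ids standing in for GPU textures
        self.in_use = OrderedDict()        # node name -> slot, least recently used first
        self.pinned = set()                # e.g. layer tips we never want to evict

    def acquire(self, name):
        """Return (slot, hit) for `name`, evicting the LRU unpinned entry if full."""
        if name in self.in_use:
            self.in_use.move_to_end(name)  # mark as most recently used
            return self.in_use[name], True
        if not self.free:
            victim = next((n for n in self.in_use if n not in self.pinned), None)
            if victim is None:
                raise RuntimeError("every slot is pinned")
            self.free.append(self.in_use.pop(victim))
        slot = self.free.pop()
        self.in_use[name] = slot
        return slot, False
```

A miss (`hit` is `False`) means the caller must re-render that node into the returned slot. Pinning the tips is what keeps undo and new strokes cheap under memory pressure.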

One downside of using a dependency graph is that any time the viewport is moved (e.g. while painting), the entire graph is invalidated. There is undoubtedly some clever trickery we could do with tiled rendering, but I haven't thought much about the topic. Tiled rendering may also allow an "infinite" canvas.
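One hypothetical direction for that tiled-rendering idea is to cache each node's output per fixed-size tile in canvas coordinates rather than per screen. Panning then only needs to render tiles that were not already visible. The sketch below only computes which tiles a viewport covers. It assumes integer pixel coordinates, an arbitrary 256-pixel tile size and no zoom. Python's floor division keeps negative coordinates working, which is what an "infinite" canvas would need.

```python
def tiles_for_view(x0, y0, width, height, tile=256):
    """Canvas-space (tx, ty) tile coordinates covering a viewport rectangle."""
    tx0, ty0 = x0 // tile, y0 // tile
    tx1, ty1 = (x0 + width - 1) // tile, (y0 + height - 1) // tile
    return {(tx, ty) for tx in range(tx0, tx1 + 1) for ty in range(ty0, ty1 + 1)}

before = tiles_for_view(0, 0, 1920, 1080)
after = tiles_for_view(300, 0, 1920, 1080)  # pan 300 px to the right
new_tiles = after - before                  # only these would need rendering
print(len(before), len(new_tiles))          # 40 5
```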

So where do we go from here? There are three things to build:

- A file format to represent a scalable editable raster graphic

- A renderer for that file format

- An image editor for creating them

Also, did I mention that this whole thing came about because there are no touch-friendly image editors for Linux? At least, none that I could find. GIMP and Krita are great, but you need a keyboard to use them properly. This got me thinking about how to build my own image editor and... well, my plan may have grown a little larger than I would have liked.