In winter 2023 a friend and I decided to have a shot at building a business selling AI-generated T-shirts. Turns out everyone was doing this, so we're glad we didn't invest any real money in it, but it was a fun learning experience for me about AI and just how fast this field is moving.

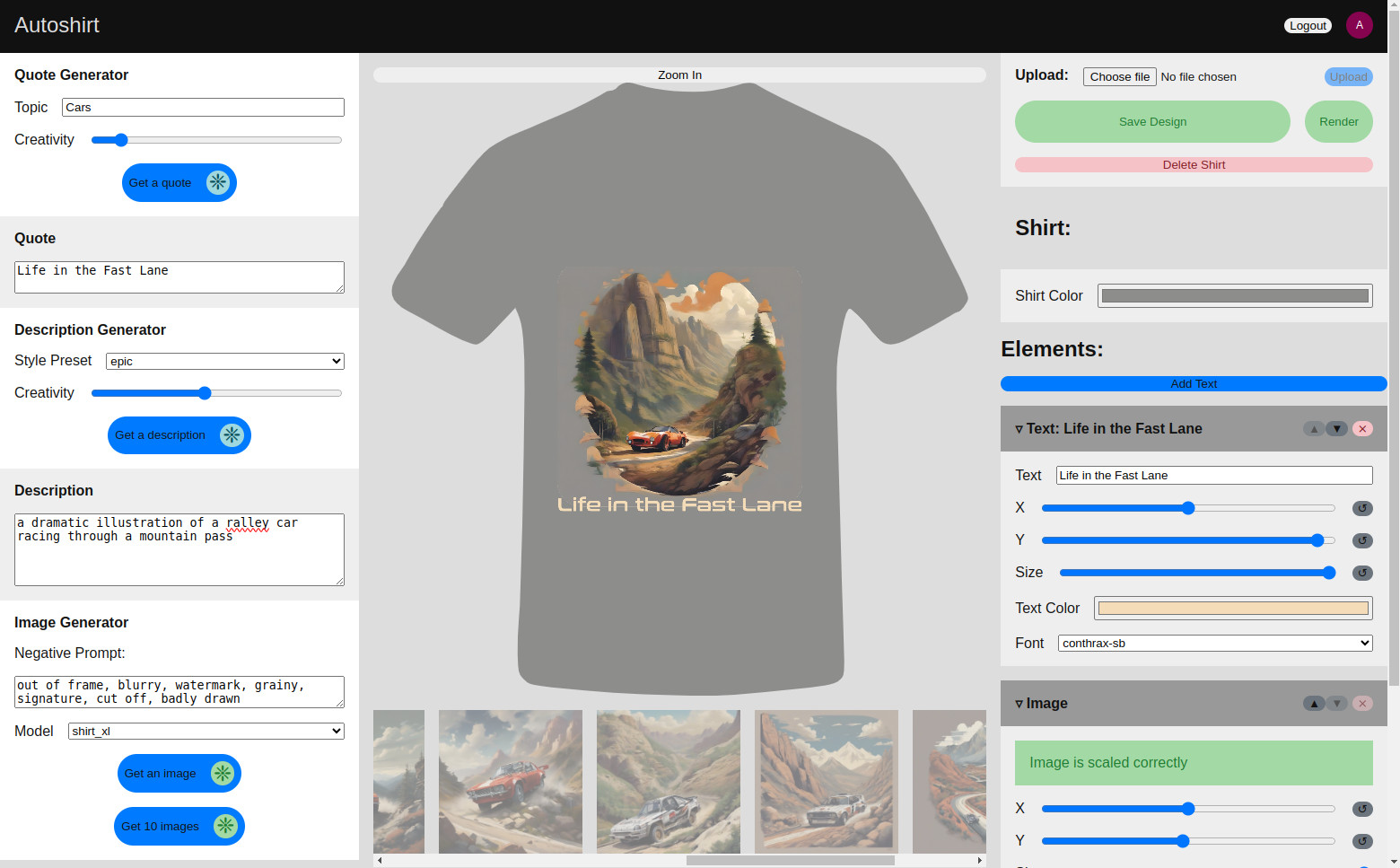

Our idea was simple: get an AI to generate thousands of shirts on a given topic. For example, if you know Christmas is coming up, why not generate 10,000 Christmas-themed shirts? If you know people like cats and cars, why not get an AI to spit out thousands of shirts about that? I would hack together some software, and he'd manage actually using it and uploading to online stores.

What it came down to was a pipeline. On one end a user provides a topic (e.g. "cars"). From that, a large language model generates a quote (e.g. "life in the fast lane") and an image description (e.g. "a dramatic illustration of a rally car racing through a mountain pass"). An image generation model then turns the description into a bunch of candidate images. These images are too low-res for printing, but can be upscaled by another AI model before finally being uploaded to an online store. It looks like this:

Gah! I thought it was supposed to be automatic? Why such a big web UI? Well, when we started this project AI wasn't very good. The available LLM would frequently generate garbage (even with good prompt engineering), and Stable Diffusion 1.5 was very hit-and-miss. So it helped to have human review at every stage of the process. Over the two months or so of hobby time that I spent on this project, that changed drastically. Llama 2 came out, Stable Diffusion XL was released, and someone fine-tuned a shirt-specific LoRA. These improvements took our hit rate from one in a hundred designs being good enough to one in ten.
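To make the "human review at every stage" idea concrete, here is a minimal sketch of the pipeline with a review gate after each step. It is not the project's actual code: `Design`, `run_pipeline`, the `approve` callback and all the `fake_*` stand-ins are hypothetical names invented for illustration, and the real stages would call the LLM, the image model and the upscaler.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Design:
    topic: str
    quote: str
    image_prompt: str
    image: bytes = b""


def run_pipeline(
    topic: str,
    generate_ideas: Callable[[str], Iterable[Design]],
    generate_image: Callable[[str], bytes],
    upscale: Callable[[bytes], bytes],
    approve: Callable[[str, Design], bool],
) -> List[Design]:
    """Run topic -> ideas -> image -> upscale, with a review gate after each stage."""
    finished = []
    for design in generate_ideas(topic):
        if not approve("idea", design):
            continue  # reviewer rejected the quote / image description
        design.image = generate_image(design.image_prompt)
        if not approve("image", design):
            continue  # reviewer rejected the generated image
        design.image = upscale(design.image)
        if approve("upscaled", design):
            finished.append(design)  # ready for upload
    return finished


# Stand-in stages so the sketch runs without any models installed.
def fake_ideas(topic):
    yield Design(topic, "life in the fast lane", "a rally car racing through a mountain pass")
    yield Design(topic, "garbage output", "")


def fake_image(prompt):
    return b"lowres:" + prompt.encode()


def fake_upscale(image):
    return image.replace(b"lowres:", b"hires:")


def fake_reviewer(stage, design):
    return bool(design.image_prompt)  # reject ideas with no image description


designs = run_pipeline("cars", fake_ideas, fake_image, fake_upscale, fake_reviewer)
print([d.quote for d in designs])  # ['life in the fast lane']
```

Rejecting early matters when the hit rate is low: a bad quote that is thrown out at the first gate never costs any GPU time for image generation or upscaling.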

So anyway, we stuck 50 designs up on Redbubble in a week of evenings, and sold exactly zero shirts. Problem was, everyone else was doing exactly the same thing. The amount of AI-generated art on Etsy, Redbubble, eBay etc. was exploding. We could have outpaced them in quantity (our slowest part was uploading to the store), but not in quality.

Still, there's some tech behind the scenes worth writing up.

Infrastructure

Hardware

This whole lot ran on a desktop PC at home, and served (at peak) 4 concurrent users. Initially the machine just had an RTX 3070 Ti, which has 8GB of VRAM. This was just enough to hold either the Stable Diffusion model or the LLM, but not both. On top of that, there was a memory leak in llama-cpp-python whenever you loaded/unloaded the language model, so occasionally it would die. This led to me upgrading the machine: first the motherboard and power supply, and then chucking in an RTX 3060 (12GB VRAM). With one GPU per model, the software no longer needed to multiplex a single GPU, which led to way better performance. This all sat behind like 3 layers of NAT and various Wi-Fi links, so I took the easy way out and exposed it to the internet with ngrok.
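For a rough picture of what multiplexing one GPU between two models involves, here is a sketch under stated assumptions. `SingleGpuSlot` and the stand-in loaders are hypothetical and not taken from the project; a real loader would construct the llama-cpp-python model or the diffusion pipeline, and dropping a reference would not necessarily free the VRAM straight away.

```python
import threading
from typing import Any, Callable, Dict, Optional


class SingleGpuSlot:
    """Keeps at most one model resident at a time, swapping on demand."""

    def __init__(self, loaders: Dict[str, Callable[[], Any]]):
        self._loaders = loaders          # name -> function that loads that model
        self._lock = threading.Lock()    # serialises all work on the GPU
        self._name: Optional[str] = None
        self._model: Any = None
        self.swaps = 0                   # how many times a model was (re)loaded

    def run(self, name: str, job: Callable[[Any], Any]) -> Any:
        with self._lock:
            if self._name != name:
                self._model = None       # drop the old model before loading the new one
                self._model = self._loaders[name]()
                self._name = name
                self.swaps += 1
            return job(self._model)


# Stand-in loaders: real ones would load the LLM or the image model.
slot = SingleGpuSlot({
    "llm": lambda: (lambda prompt: f"quote about {prompt}"),
    "diffusion": lambda: (lambda prompt: f"image of {prompt}"),
})

print(slot.run("llm", lambda m: m("cars")))
print(slot.run("diffusion", lambda m: m("a rally car")))
print(slot.run("llm", lambda m: m("cats")))
print(slot.swaps)  # 3: every alternation between models forces a reload
```

With several users alternating between quote and image requests, almost every request triggers a swap, and every swap is another chance to hit a load/unload leak. Giving each model its own GPU removes the swapping entirely.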

Software

The frontend was done in Vite and React, and I didn't bother to use any component library. I did end up writing a minimal shirt-layout tool in canvas, which was pretty fun. Other than that, most of the work was in the backend.
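The core arithmetic of a layout tool like that is small. As an illustration only (the real tool ran in the browser on a canvas and may have worked quite differently), here is one way to scale a design into a print area and centre it. The function name `fit_design`, the `margin` parameter and the print-area size are all assumptions.

```python
def fit_design(design_w, design_h, area_w, area_h, margin=0.0):
    """Scale a design to fit inside the print area, keeping its aspect ratio,
    and centre it. Returns (x, y, width, height) in print-area pixels.
    `margin` is the fraction of the area left empty on each side."""
    usable_w = area_w * (1 - 2 * margin)
    usable_h = area_h * (1 - 2 * margin)
    scale = min(usable_w / design_w, usable_h / design_h)
    w, h = design_w * scale, design_h * scale
    return ((area_w - w) / 2, (area_h - h) / 2, w, h)


# A square 1024x1024 image on a hypothetical 2400x3200 print area.
print(fit_design(1024, 1024, 2400, 3200))  # (0.0, 400.0, 2400.0, 2400.0)
```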

The backend was surprisingly complete for what it was. There were six containers all up!

- Ngrok. This exposed Vite to the web

- Vite. This hosted the frontend and handled routing to the other containers

- Main Server. This handled sessions and logins, all hand-rolled and similar to the Network Detached Storage Device I built (it was quicker to implement some cryptography than to rely on an external service provider). Yeah, storing passwords in your own DB isn't good at production scale, but for a small project like this where there is no PII to leak? Who cares if someone steals the DB! On that topic, the DB was an SQLite DB mounted on a volume into this server. Super simple.

- Image Server. This handled image generation requests, and was assigned the RTX 3070 Ti

- Quote Server. This handled language model requests, and was assigned the RTX 3060
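As a rough idea of what hand-rolled logins on top of SQLite can look like, here is a small sketch using only the Python standard library. It is not the Main Server's actual scheme: the table layout, the function names and the choice of PBKDF2 with 200,000 iterations are all assumptions made for illustration.

```python
import hashlib
import hmac
import os
import sqlite3

# In-memory DB for the sketch; the real one was a file on a mounted volume.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (name TEXT PRIMARY KEY, salt BLOB, pw_hash BLOB)")


def hash_password(password: str, salt: bytes) -> bytes:
    # Salted, deliberately slow hash; the iteration count is illustrative.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 200_000)


def register(name: str, password: str) -> None:
    salt = os.urandom(16)  # fresh random salt per user
    db.execute(
        "INSERT INTO users VALUES (?, ?, ?)",
        (name, salt, hash_password(password, salt)),
    )


def check_login(name: str, password: str) -> bool:
    row = db.execute(
        "SELECT salt, pw_hash FROM users WHERE name = ?", (name,)
    ).fetchone()
    if row is None:
        return False  # unknown user
    salt, stored = row
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(stored, hash_password(password, salt))


register("alice", "correct horse")
print(check_login("alice", "correct horse"))  # True
print(check_login("alice", "wrong guess"))    # False
```

Storing only a salted hash means that even if someone does steal the DB, they get no plaintext passwords that users might have reused elsewhere.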

This all worked great! What was a real pain was uploading to Redbubble. There is no API for it, so lots of clicking was involved. Generating a shirt took seconds. Uploading it took dozens of clicks and minutes of human time. Arrgh! And you can't control the default shirt color, change cover images, or do several other things I would have expected to be easy. It may be that I'm missing something, but we found Redbubble incredibly frustrating to use.